Implementation

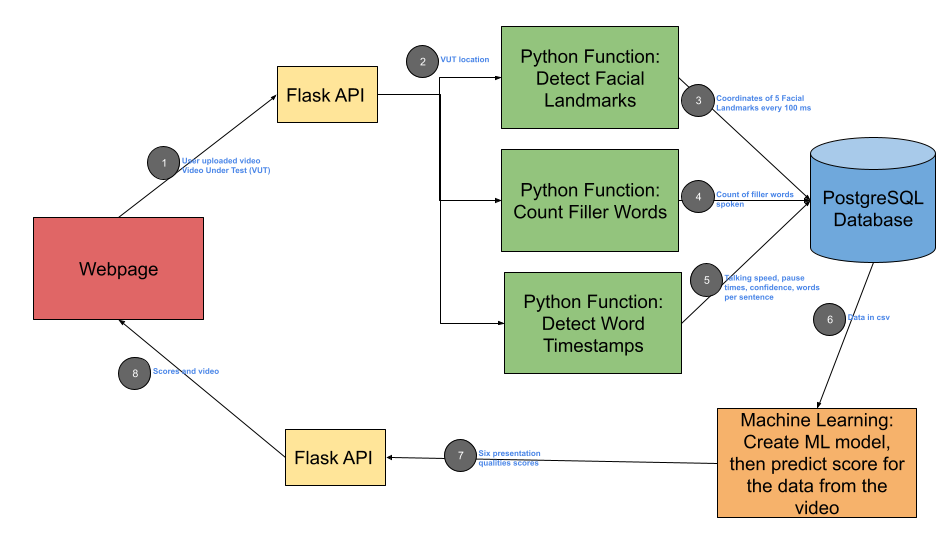

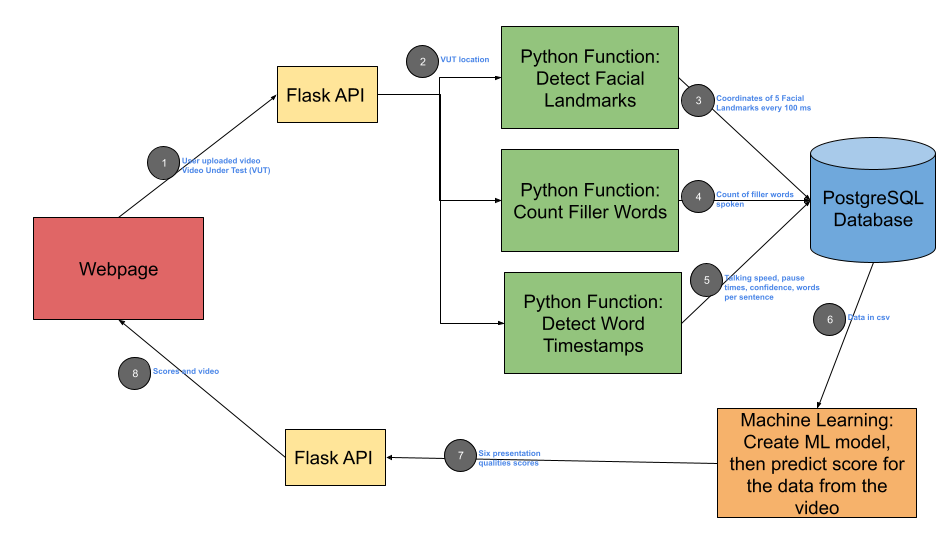

System architecture showing the flow from video input to scoring output

About 74% of people share a fear of public speaking, yet the skill has a tremendous impact on our lives: people who speak well are generally more successful and happier.

The challenge is: How can a computer analyze the quality of a speech automatically?

Speech Trainer is a machine learning-based software application that provides users with comprehensive feedback scores to improve their presentation skills.

By analyzing six key presentation qualities, Speech Trainer helps users develop confidence and effectiveness in public speaking.

I studied several TED talk videos and noticed that verbal delivery and body language are both central to an effective presentation. From this research, I identified six key presentation qualities that significantly affect speech effectiveness:
